September 12, 2024, might just be the date we remember as the turning point in artificial intelligence.

OpenAI, the company behind ChatGPT, GPT-3, DALL-E 2, and Sora, unveiled something even more groundbreaking: OpenAI o1, a large language model that doesn’t simply generate responses; it thinks, ponders, reasons. This isn’t just another incremental upgrade. It is something earlier generative AI tools were not built to do, a paradigm shift that demands we rethink what artificial intelligence can do and what it means for our future.

What is special about OpenAI o1?

Although it is fairly new and still a work in progress, it’s clear that OpenAI o1 is not your typical generative AI. It thinks before it answers, aiming to reduce the hallucinations that have plagued earlier models.

Before OpenAI o1, the race in AI was about scale: ever-larger models trained on ever-larger data sets. This was the “bigger is better” paradigm. GPT-2 had 1.5 billion parameters, GPT-3 had 175 billion, and GPT-4 is reported to have a whopping 1.76 trillion (a figure OpenAI has not confirmed), and these models dominated the landscape. The focus was on pre-training models with vast amounts of data, hoping that sheer scale would equate to intelligence. However, despite their size, these models didn’t “think.” They were fluent in language, but they worked by predicting the next word based on patterns learned during training, which often led to confident yet incorrect answers.

OpenAI o1 changes that. It’s designed to reason, not just regurgitate. It uses a “long internal chain of thought,” allowing it to process complex queries more like a human would. This is not just an upgrade but more like a revolution in the world of AI.

Is it still “generative” AI?

This brings us to a critical question: Is o1 still a generative AI? When a model starts reasoning, evaluating, and adjusting its responses based on nuanced input, it’s no longer simply generating. It’s conversing, analyzing, and problem-solving.

OpenAI o1 represents a hybrid shift. It still generates content but does so with deeper cognitive layers. It combines existing knowledge with critical thinking, marking an evolution into a more cognitively aware model.

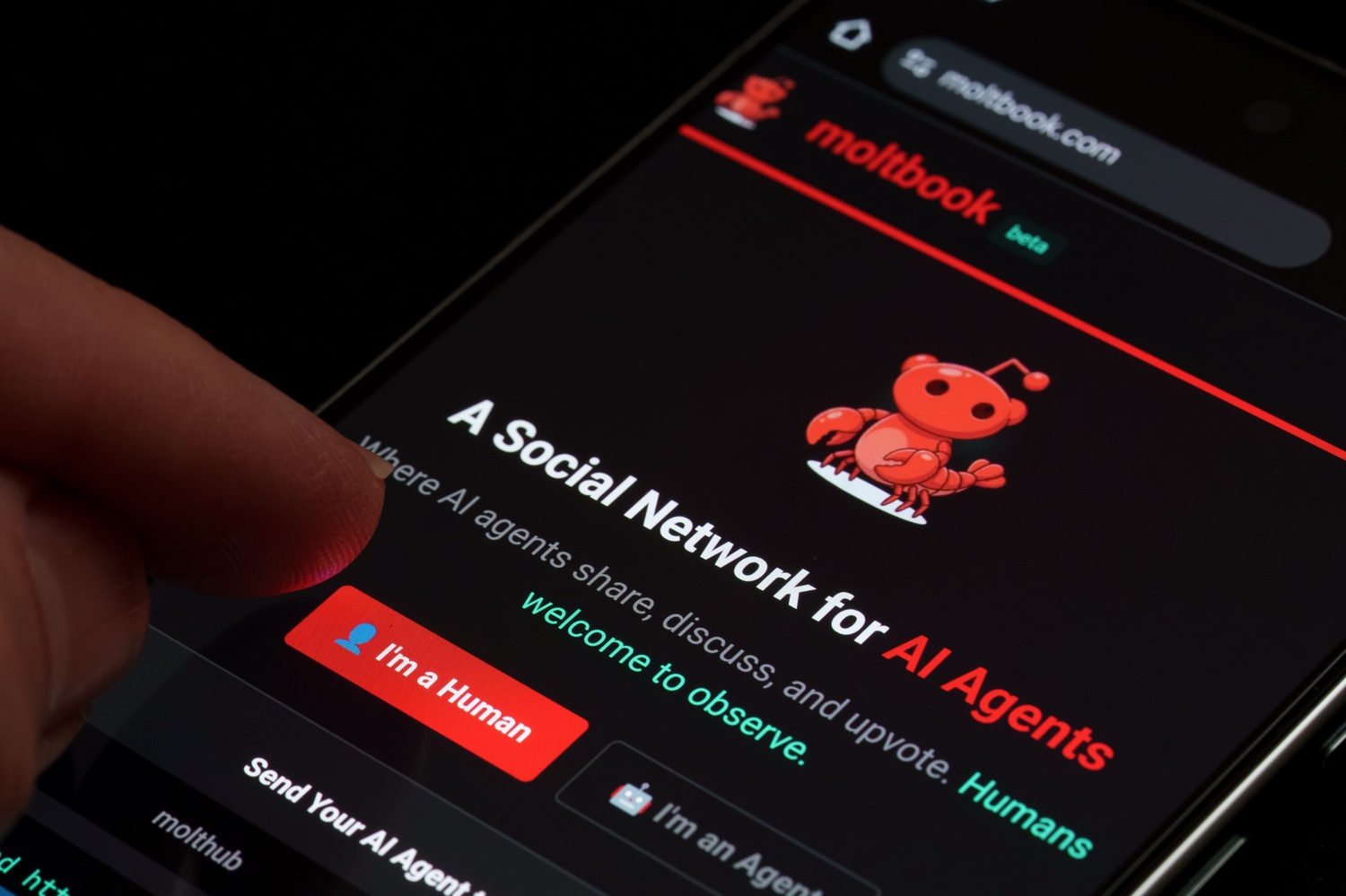

This shift from mere generation to reasoning raises one profound question: How do we interact with machines and models that claim that they can think? Our interaction with machines is set to transform dramatically. No longer are we dealing with tools that merely execute commands; we’re engaging with entities that can process, reason, and maybe even challenge our inputs down the line.

Imagine posing a complex business problem to an AI, and instead of an immediate (and possibly shallow, maybe incorrect) response, the AI asks clarifying questions, suggests alternative strategies, or points out potential flaws in your approach. The interaction becomes a dialogue, a collaboration of sorts that was possible only with fellow humans before.

Psychological and practical implications

We’re moving from simple command-response exchanges to engaging in conversations with machines capable of reasoning and challenging our perspectives. This shift raises a host of psychological and practical questions that we need to grapple with.

First off, how will we relate to AI models that can think? Trust becomes a crucial factor here. As these models start exhibiting human-like reasoning, will we place more faith in their judgments, or will we be more skeptical? There’s a thin line between leveraging AI for complex problem-solving and becoming overly reliant on it, potentially at the expense of our own critical thinking skills. We might find ourselves asking, “Am I making this decision or is the AI making it for me?”

Then there’s the matter of patience. We’re accustomed to instant gratification. Ask a question, get an immediate answer. But what happens when AI starts taking its time, deliberating over complex queries just as a human would?

Accountability is another open question. As AI systems become more independent in their reasoning, who bears the blame if an AI advises a course of action that leads to unintended consequences? Is it the developers, the users, or the AI itself? This ambiguity could lead to legal and moral dilemmas that society isn’t fully prepared to handle yet.

Moreover, as AI begins to mimic human thought processes, we might start to anthropomorphize these systems, which isn’t a good idea. We might begin attributing emotions and consciousness to machines that don’t possess them, at least not yet. This could have profound psychological effects. For instance, could forming emotional attachments to AI impact our relationships with other humans? It’s a scenario that, while speculative, isn’t entirely outside the realm of possibility. There are already reports of people marrying AI companions and starting virtual families.

Transparency becomes another significant issue. For us to trust these reasoning AI models, they need to be able to explain their thought processes. However, OpenAI does not show o1’s raw chain of thought to users; it shows a model-generated summary instead. OpenAI’s stated reason is that the chain of thought is useful for monitoring the model only if it is left unaltered, so the company cannot train policy compliance or user preferences onto it, and it does not want an unfiltered chain of thought shown directly to users. If an AI provides a recommendation, understanding how it arrived at that conclusion is essential for us to assess its validity. Otherwise, we risk placing blind faith in it, which could be dangerous.

Impact on work, workplace, workforce

Unlike previous AI models, o1 can think critically, which allows it to contribute meaningfully to complex decision-making processes within organizations. We have to consider how to prepare the workforce for such shifts, perhaps by emphasizing skills that complement AI rather than compete with it.

This change compels us to rethink the future of work and the roles we’ll play in an AI-enhanced landscape. o1’s reasoning capabilities blur the lines between tasks that require human intuition and those that can be automated. That challenges our traditional notions of job security as well as our assumptions about which skills will stay in demand.

One of the most significant impacts will be on job roles and employment patterns. Tasks involving complex problem-solving, analysis, and decision-making—once considered exclusive to human expertise—are now within o1’s reach. Roles in financial analysis, legal research, medical diagnostics, and even creative fields like content creation could be transformed.

The skills valued in the workforce will shift accordingly. There’ll be a greater emphasis on soft skills like emotional intelligence, critical thinking, adaptability, and complex problem-solving. Emotional intelligence becomes even more critical as we engage in tasks that require empathy, negotiation, and relationship-building. Being able to assess AI recommendations critically, question assumptions, and make informed judgments will be essential to ensure that AI serves as an effective tool rather than an unquestioned authority.

Workplace culture and policies will need to adapt too. Traditional metrics of productivity may become obsolete as AI takes over routine tasks. Organizations might need to develop new ways of assessing productivity that account for AI contributions. Flexible work arrangements could become more common as we focus on tasks requiring human insight and creativity, which might not fit into the traditional nine-to-five schedule.

The future of AI is no longer just about generating—it’s about thinking. And as we navigate this brave new world, one thing is clear: we’re not just building smarter machines; we’re redefining intelligence itself.

The journey has just begun, and where it leads is limited only by our imagination, or perhaps, by the imaginations of the very machines we’re creating.