For nearly thirty years, law enforcement has been complaining about encryption. The result is an ongoing “going dark” debate, which asks, in part, how society and the tech industry can respect users’ privacy without hampering law enforcement’s ability to access data on users’ devices. Most notably, this debate came to a head in 2016 during the FBI v. Apple case that followed the San Bernardino shooting.

In that dispute, U.S. courts weighed whether Apple could lawfully be compelled to help the FBI unlock an iPhone used by one of the San Bernardino shooters and access its encrypted data. The legal fight was never resolved: federal agents gained access to the phone with the help of a third party, which left the legal question up in the air.

Now the “going dark” debate is dominating news headlines again, because Apple recently proposed significant changes to its operating system, known as client-side scanning, to fight child sexual abuse material (CSAM). This is a laudable goal; however, it is imperative that such an initiative not compromise civil rights and national security.

Unfortunately, according to a group of computer scientists, engineers, and internationally renowned policy experts, Apple’s client-side scanning (CSS) initiative would cause more harm than good. Apple has since postponed its CSS-based CSAM-detection system, at least for the time being.

It’s worth noting that the scholars who wrote last month’s paper, Bugs in our Pockets: The Risks of Client-Side Scanning, were concerned about CSS long before Apple’s initiative. According to Ross Anderson, a Cambridge University professor, the scholars began their research on CSS in 2018 after hearing that British intelligence (GCHQ) was planning to use CSS to get around the end-to-end encryption in WhatsApp. According to Anderson, GCHQ was exploring technical options to fight CSAM and terrorist recruitment. Worried that such a system wouldn’t be deployed safely from a design, legal, and policy standpoint, the scholars were galvanized into action.

However, before we get into why client-side scanning is nefarious…

What is client-side scanning?

CSS is the scanning of content on a user’s own device, such as a phone or tablet, before that content is encrypted (or after it is decrypted). Although Apple’s recent initiative was well-intentioned, it set off security and data privacy alarm bells.

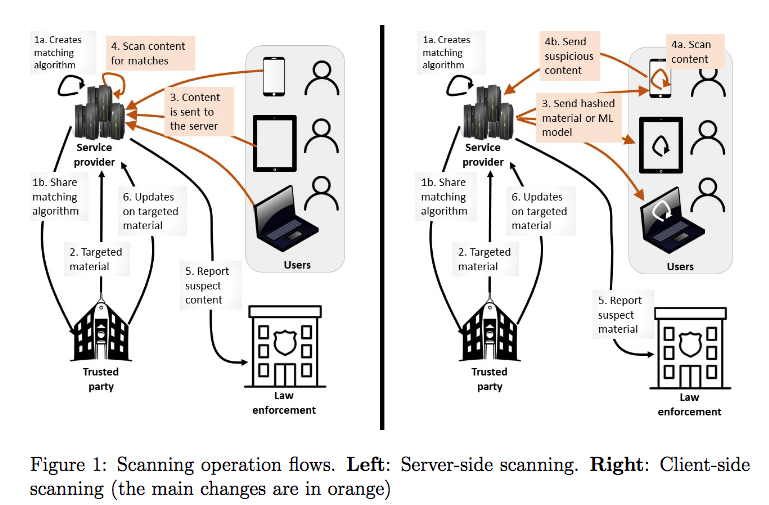

Currently, most tech companies use server-side scanning, in which the scanning runs on the companies’ own servers. Apple already scans content uploaded to its iCloud servers, and any questionable material is forwarded to the appropriate authorities. With CSS, by contrast, Apple would use machine learning and perceptual hashing, which converts each photo into a compact fingerprint (a hash) designed to stay the same when the image is resized or recompressed. All of the scanning would take place on users’ devices before encryption. In Figure 1, the flow on the right depicts CSS, and the flow on the left depicts server-side scanning.

Figure 1: Difference between server-side scanning and CSS.

Source: Bugs in our Pockets: the Risks of Client-Side Scanning.
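To make the idea of a perceptual hash concrete, here is a minimal Python sketch of a classic “average hash” (aHash). It is an assumption for illustration only: Apple’s NeuralHash derives its hash from a neural network, not from this formula, and the tiny 4×4 “image” below is made up. What the sketch shows is the key property: unlike a cryptographic hash such as SHA-256, a perceptual hash is deliberately insensitive to small changes, so a slightly brightened copy of a photo keeps the same fingerprint.

```python
# Toy "average hash" (aHash) perceptual hash, for illustration only.
# Apple's NeuralHash uses a neural network instead; this is NOT that algorithm.
import hashlib


def average_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the image mean.

    `pixels` is a small grayscale image as a list of rows of ints (0-255),
    assumed to be already downscaled.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)


def sha256_hex(pixels):
    """Cryptographic hash of the raw pixel bytes, for comparison."""
    return hashlib.sha256(bytes(p for row in pixels for p in row)).hexdigest()


# Hypothetical 4x4 grayscale image.
original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 215],
    [18, 198, 28, 225],
]
# Slightly brightened copy, as might result from an edit or re-encoding.
brighter = [[min(p + 5, 255) for p in row] for row in original]

print(average_hash(original) == average_hash(brighter))  # True: same fingerprint
print(sha256_hex(original) == sha256_hex(brighter))      # False: entirely different digest
```

Real aHash implementations first shrink the image to 8×8 grayscale, producing a 64-bit hash; the comparison logic is the same.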

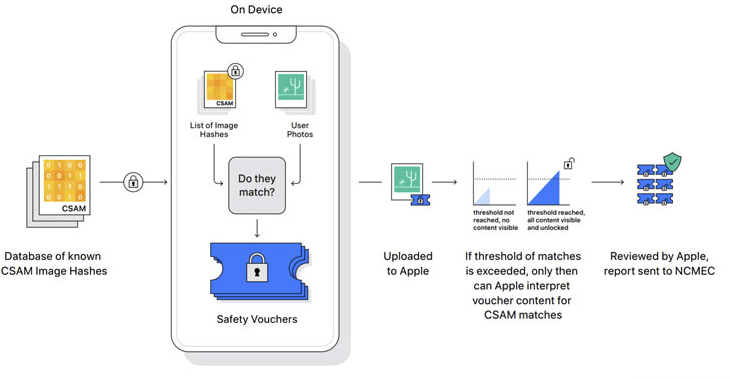

Under Apple’s proposed system, images are analyzed by an on-device machine learning algorithm to determine whether any of them match a database of known CSAM images. Again, it’s important to note that Apple already checks for such images on the server side, as do Dropbox, Facebook, Google, Microsoft, and Twitter. The difference is that the scanning would now be performed on the devices of all users, all the time.

Figure 2: Apple’s proposed client-side scanning process for shared images.

Source: Lakshmanan, Ravie. The Hacker News. August 6, 2021. “Apple to scan every device for child abuse content—but experts fear for privacy.”
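The matching step can be sketched in a few lines of Python. In this hypothetical example, each photo’s hash is compared against a set of known hashes, and a photo counts as a match if its hash differs from a known one in no more than a small number of bits (the Hamming distance). The 16-bit hashes, the database, and the `MAX_DISTANCE` tolerance are all invented for illustration; Apple’s published design also wrapped this step in cryptographic protections that are not modeled here.

```python
# Toy sketch: match on-device image hashes against a database of known hashes.
# Hypothetical: the 16-bit hashes, the database, and MAX_DISTANCE are made up.

MAX_DISTANCE = 2  # hypothetical tolerance, in differing bits


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def is_match(image_hash: int, known_hashes: set[int], max_distance: int = MAX_DISTANCE) -> bool:
    """Flag the image if its hash is within max_distance bits of any known hash."""
    return any(hamming(image_hash, h) <= max_distance for h in known_hashes)


known = {0b1010_1100_0011_0101, 0b0000_1111_0000_1111}

photos = {
    "near_copy_of_known": 0b1010_1100_0011_0111,  # 1 bit away from a known hash
    "unrelated_photo": 0b0101_0011_1100_1010,     # far from every known hash
}

for name, h in photos.items():
    print(name, "flagged" if is_match(h, known) else "not flagged")
```

The tolerance is the knob that matters: a larger `MAX_DISTANCE` catches more altered copies but also flags more unrelated photos, a trade-off between false negatives and false positives that attackers can exploit.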

Proponents of CSS

CSS evangelists believe the system resolves the long-standing debate over encryption versus public safety. CSS doesn’t give law enforcement backdoor keys, nor does it weaken encryption, because all of the scanning is done before the content is encrypted. Images are flagged and sent to the authorities only if they match the database of known images the system checks against. Down the line, proponents see CSS as an effective way to uncover CSAM, terrorist recruitment materials, animal abuse, and a host of other content.

That said, CSS has three problems: its questionable efficacy, its lack of transparency, and its potential to be repurposed by law enforcement, intelligence agencies, and bad actors. We’ll discuss each of these issues in turn.

Issue 1: Efficacy

According to the paper’s authors, CSS is less effective in adversarial environments. Through evasion attacks and data poisoning, bad actors can either slip targeted content past the scanner undetected (false negatives) or disrupt the system with false positives, causing large amounts of innocuous content to be flagged as problematic. These types of attacks are not new, but CSS gives bad actors a distinctive new advantage: direct access to the devices being scanned. As the “Bugs in our Pockets” authors explain,

“The adversary can use its access to the device to reverse engineer the mechanism. As an example, it took barely two weeks for the community to reverse engineer the version of Apple’s NeuralHash algorithm already present in iOS 14, which led to immediate breaches….”
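Why does on-device access matter so much? Because the attacker can run the hash function locally as many times as it likes. The hypothetical Python sketch below uses a toy average hash (not NeuralHash) and an illustrative greedy search: it repeatedly nudges the pixel closest to the image’s mean brightness across that mean, until the hash drifts more than `MAX_DISTANCE` bits from the original and a matcher would no longer flag it. The image, step size, and tolerance are all assumptions.

```python
# Toy evasion attack on a perceptual hash that the attacker can run locally.
# Hypothetical throughout: this average hash, the 4x4 image, the step size, and
# MAX_DISTANCE are illustrative stand-ins, not NeuralHash or its real parameters.

MAX_DISTANCE = 2  # hashes within 2 differing bits count as a match


def average_hash(pixels):
    """One bit per pixel: 1 if the pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return [1 if p > mean else 0 for p in flat]


def hamming(a, b):
    """Number of positions where two equal-length bit lists differ."""
    return sum(x != y for x, y in zip(a, b))


def evade(pixels, step=4):
    """Greedily nudge pixels until the hash no longer matches the original.

    Each iteration re-runs the hash, which an attacker holding the scanner
    on their own device can do offline as often as they like.
    """
    target = average_hash(pixels)
    img = [row[:] for row in pixels]  # work on a copy
    width = len(img[0])
    while hamming(average_hash(img), target) <= MAX_DISTANCE:
        flat = [p for row in img for p in row]
        mean = sum(flat) / len(flat)
        bits = average_hash(img)
        # Among pixels whose bit still agrees with the original hash,
        # pick the one closest to the mean: it is the cheapest to flip.
        candidates = [i for i in range(len(flat)) if bits[i] == target[i]]
        i = min(candidates, key=lambda k: abs(flat[k] - mean))
        r, c = divmod(i, width)
        # Push that pixel toward (and eventually across) the mean.
        img[r][c] += step if bits[i] == 0 else -step
    return img


original = [
    [10, 200, 30, 220],
    [15, 210, 25, 230],
    [12, 205, 35, 215],
    [18, 198, 28, 225],
]
evaded = evade(original)
changed = sum(a != b for ra, rb in zip(original, evaded) for a, b in zip(ra, rb))
print("hash bits changed:", hamming(average_hash(original), average_hash(evaded)))
print("pixels changed:", changed)
```

Attacks on neural hashes typically use gradient-based optimization rather than single-pixel nudges, but the loop is the same idea: query, adjust, repeat, entirely offline.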

Issue 2: Lack of transparency

Like most machine learning systems, CSS systems are generally not auditable: the public has no way to oversee, audit, or otherwise evaluate them. This technology can be repurposed into a mass surveillance tool, and the opacity of a mobile OS makes it difficult, if not impossible, to ascertain what is actually being scanned. In short, there is little in the way of transparency.

In a piece for the Brookings Institution’s Lawfare blog, Susan Landau, Bridge Professor in Cyber Security and Policy at Tufts University, writes,

“Such [CSS] systems are nothing less than bulk surveillance systems launched on the public’s personal devices.”

Issue 3: Expansion of scope

In this author’s opinion, this is the main issue. Government agencies such as the FBI want to do more than just scan for CSAM. European and American entities have already signaled interest in using CSS for a range of other purposes, such as building systems that can identify organized crime, terrorist activity, fraud, tax evasion, and insider trading.

The problem lies in the potential to repurpose the CSS system. Landau writes,

“Currently designed to scan for CSAM, there is little that prevents such systems from being repurposed to scan for other types of targeted content, whether it’s embarrassing personal photos or sensitive political or business discussions.”

Self-proclaimed “Apple Holic” Jonny Evans agrees that the repurposing of CSS systems is a major issue. In a recent article for Computerworld, Evans writes, “Effectively, once such a system is put in place, it’s only a matter of time until criminal entities figure out how to undermine it, extending it to detect valuable personal or business data, or inserting false positives against political enemies.”

Once a CSS system is in place, what is to stop law enforcement, or other actors, from searching for whatever type of data they want to find on a user’s device? We would have to rely on lawmakers and Big Tech companies to keep the scope of surveillance from expanding.

As the “Bugs in our Pockets” scholars note,

“Only policy decisions prevent the scanning expanding from illegal abuse to other material of interest to governments; and only the lack of a software update prevents the scanning expanding from static images to content stored in other formats, such as voice, text, or video.”

CSS should be treated like wiretapping

The problem with many CSS systems, like the one recently proposed by Apple, is that they are placed on everyone’s devices by default. This is a seismic shift. It’s a move from targeted surveillance of suspected criminals to the scanning of everyone’s private data all the time—all without a warrant or suspicion.

According to John Naughton, an Irish academic and professor of the public understanding of technology at the Open University, CSS should be treated in the same legal manner as wiretapping. After all, CSS systems would give governments access to users’ private content, so such access should require the same legal authorization as a wiretap. The reason we have wiretap laws in the first place is to make it more difficult for governments to infringe on civilians’ privacy.

With CSS, government agencies would no longer need to obtain a warrant and physically access a user’s device. CSS would make it far cheaper for governments to scan files on devices; wiretaps, by contrast, aren’t cheap: the average cost of a wiretap in 2020 was $119,000. This would be a drastic change, and not for the better.

Conclusion

As the “Bugs in our Pockets” scholars summarize,

“The introduction of [CSS] on our personal devices—devices that keep information from to-do notes to texts and photos from loved ones—tears at the heart of privacy of individual citizens. Such bulk surveillance can result in a significant chilling effect on freedom of speech and, indeed, on democracy itself.”

As a society, we should do everything in our power to help law enforcement find bad actors, but not if the solution turns our cell phones into mass surveillance devices. As Apple likely realized before postponing its initiative, its CSS system was not appropriate in its current form. After all, any solution that undermines our civil rights and national security isn’t really a solution at all.